Synthetic Ecology (Model V01)

By Nikzad Arabshahi

Visual Installation

Koninklijke Academie van Beeldende Kunsten, The Hague, The Netherlands, May 2022

About

Alife (Artificial Life)

Artificial life (also known as ALife) is an interdisciplinary study of life and life-like processes. Alife attracts researchers and creative thinkers from many disciplines, such as computer science, physics, biology, chemistry, economics, and philosophy. As defined by Chris Langton, an American computer scientist and one of the founders of the field, artificial life is a field of study devoted to understanding life by attempting to abstract the fundamental dynamical principles underlying biological phenomena and recreating these dynamics in other physical media, such as computers, making them accessible to new kinds of experimental manipulation and testing (Bedau, M. A. 2008). Langton made numerous contributions to the field. Inspired by ideas from his background in physics, especially phase transitions, he developed several fundamental concepts and quantitative measures for cellular automata and proposed that critical points separating order from disorder could play a crucial role in shaping complex systems, particularly in biology.

Among the many topics that make up the study of Alife, the most important concern the essence of living systems rather than their contingent features. While biological research is essentially analytic, trying to break complex phenomena down into their basic parts, Alife is synthetic: it tries to gain a deeper understanding of living systems by constructing phenomena from their elemental units and artificially synthesizing simple forms of life. Although the main goal of synthesizing such simple systems is to generate something very life-like, the results often remain quite unfamiliar compared with what we know as life. In this way, artificial life complements traditional biological research by constructively exploring the boundaries of what is possible for life, opening new paths in the quest to understand the grand, ancient puzzle called life. Artificial life uses three kinds of synthetic methods to study life. Soft Alife creates computer-based simulations, or other purely digital constructions, that exhibit life-like behavior. Hard Alife produces hardware implementations of life-like systems. Wet Alife involves the creation of life-like systems under laboratory conditions using biochemical materials.

Contemporary artificial life is vigorous and diverse. Although the core of my research relates more to how Soft ALife entities emerge and evolve, I needed to look more deeply into the whole topic to gain better insight into each phase of my project. Current research in Soft, Hard, and Wet artificial life stretches the meaning of individual cells, whole organisms, and evolving populations to the point that it raises and informs several philosophical questions about emergence, evolution, life, mind, and the ethics of creating new forms of life from scratch. Before characterizing the complexities of these philosophical issues, however, it is essential to shed some light on what artificial life has historically been looking for, by briefly reviewing its history and methodology.

History and Methodology of Artificial Life

The desire to create living creatures and to discover the causes of life goes back to the dawn of history. For instance, the pursuit of artificial creatures appears in Greek, Mayan, Chinese, and Jewish mythologies, in which humans believed in the divine ability to create living creatures through magic. Other examples can be found in the Middle Ages, such as the automata created by Al-Jazari, who invented water clocks driven by both water and weights, and the legendary brazen head of Albertus Magnus. Later, during the Italian Renaissance, Leonardo da Vinci made the mechanical knight, a humanoid that could stand, sit, and raise its arms. He also made a mechanical lion that could walk forward and open its chest to reveal a cluster of lilies (Mazlish, B. 1995). In the modern age, automata became more and more sophisticated thanks to advances in clockwork and engineering (Wood, G. 2002). One of the most impressive figures of this era was Jacques de Vaucanson, a French inventor and artist who built the first all-metal lathe, which was influential in the industrial revolution. A lathe is a machine tool that rotates a workpiece about an axis so that operations such as sanding, drilling, deformation, cutting, and knurling (a manufacturing process that presses a pattern of straight, angled, or crossed lines into the material) can be performed with special tools, producing objects that are symmetric about that axis. Vaucanson’s lathe was an early machine tool that led to the invention of other machine tools. He was responsible for the creation of impressive and innovative automata, and he was also the first person to design an automatic loom. Although his first android was considered profane and, as a consequence, his workshop was destroyed by the authorities, he did not stop innovating, and he later built a duck that appeared to eat, drink, digest, and defecate.
Other examples of modern automata are those created by Pierre Jaquet-Droz: the Writer (made of about 6,000 pieces), the Musician (about 2,500 pieces), and the Draughtsman (about 2,000 pieces).

Questions about the nature and purpose of life have long been central to philosophy, and the quest to create life has been present for centuries. Despite these many antecedents, it is commonly accepted (Bedau, M. A. 2003) that the scientific study of artificial life began in 1951, when one of the first academic Alife models was created by Von Neumann (Von Neumann, J. 1951), a Hungarian-American mathematician, physicist, computer scientist, engineer, and polymath. Von Neumann was regarded as the mathematician with the widest command of his subject in his time. He published over 150 papers in pure mathematics, applied mathematics, and physics, with the remainder on special mathematical subjects or non-mathematical ones. His analysis of the structure of self-replication preceded the discovery of the structure of DNA. At that time, Von Neumann was trying to understand the fundamental properties of living systems, particularly self-replication. His interest in self-replication as a structural feature of life led to a collaboration with Stanislaw Ulam at Los Alamos National Laboratory. As a result, Von Neumann described the concept of cellular automata and proposed the first formal self-replicating system.

Cellular automata were developed further in the 1970s and 1980s, the best-known example being Conway’s Game of Life (Berlekamp, E. R., Conway, J. H., and Guy, R. K. 1982). Also known simply as Life, it is a cellular automaton devised by the British mathematician John Horton Conway in 1970. It is a zero-player game, meaning that its evolution is determined by its initial state and requires no further input. One interacts with the Game of Life by creating an initial configuration and observing how it evolves.
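To make the rules concrete, here is a minimal Python sketch of one Game of Life generation under Conway’s standard rules: a dead cell with exactly three live neighbours is born, a live cell with two or three live neighbours survives, and every other cell is dead in the next generation. Storing live cells as a set of coordinates on an unbounded grid is a choice made for brevity, and the function name `step` is illustrative.

```python
from collections import Counter

def step(live):
    """Advance one generation. `live` is a set of (row, col) cells on an unbounded grid."""
    # Count the live neighbours of every cell that touches at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth: exactly 3 neighbours. Survival: a live cell with 2 or 3 neighbours.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

blinker = {(1, 0), (1, 1), (1, 2)}   # a horizontal line of three cells
print(sorted(step(blinker)))         # [(0, 1), (1, 1), (2, 1)]: it flips to vertical
```

Applying `step` twice returns the blinker to its original shape, a small example of a pattern that is maintained dynamically rather than statically.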

Later, Stephen Wolfram (Wolfram, S. 1983) studied computational models in the tradition of von Neumann’s cellular automata, focusing more on how their patterns evolve over time.
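As an illustration of the kind of one-dimensional automata Wolfram studied, the sketch below updates an "elementary" cellular automaton: two states per cell, each cell’s next state depends only on itself and its two neighbours, and the whole rule is encoded by Wolfram’s rule number from 0 to 255. The wrap-around edges, the starting configuration, and the function names are assumptions of this sketch.

```python
def eca_step(cells, rule):
    """One update of an elementary (1-D, two-state, radius-1) cellular automaton.
    Bit k of `rule` gives the next state for the neighbourhood whose 3-bit value is k."""
    n = len(cells)
    out = []
    for i in range(n):
        # Read (left, centre, right) as a 3-bit number; cells[-1] wraps to the right edge.
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right
        out.append((rule >> index) & 1)
    return out

def run(rule, width=31, steps=15):
    """Start from a single live cell in the middle and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = eca_step(cells, rule)
        history.append(cells)
    return history

for row in run(30):
    print("".join("#" if c else "." for c in row))
```

Changing the rule number (for example 30, 90, or 110) produces strikingly different behaviours from the same tiny update loop.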

In parallel with these studies, cybernetics studied control and communication in systems (Wiener, N. 1948). Cybernetics and systems research defined phenomena in terms of their function rather than their substrate, suggesting that life should be studied as a property of form, not matter. Alife has strong roots in cybernetics: several of its fundamental concepts, such as homeostasis and autopoiesis, were developed within or inspired by cybernetic approaches.

Homeostasis refers to any process that living organisms or agents use to actively maintain the relatively stable conditions essential for survival. The term was coined in 1926 by the physician Walter Cannon. The related term autopoiesis refers to a system capable of building and sustaining itself by producing its own parts. While homeostasis reflects the resilience of a system, autopoiesis describes the relation between the complexity of a system and the intricacy of its environment.
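A toy illustration of homeostasis as negative feedback, assuming a single internal variable (for example a body temperature with a set point of 37) and a corrective response proportional to the deviation. The parameters `set_point` and `gain` are hypothetical; real physiological regulation is far richer than this.

```python
import random

def homeostat(set_point=37.0, gain=0.5, steps=200, seed=1):
    """Each step the environment pushes the internal variable off course, then a
    corrective response proportional to the error pushes it back toward the set point."""
    rng = random.Random(seed)
    value = set_point
    trace = []
    for _ in range(steps):
        value += rng.uniform(-1.0, 1.0)       # external disturbance
        error = set_point - value
        value += gain * error                 # homeostatic correction
        trace.append(value)
    return trace

regulated = homeostat(gain=0.5)
unregulated = homeostat(gain=0.0)             # same disturbances, no regulation
print("max deviation with regulation:   ", max(abs(v - 37.0) for v in regulated))
print("max deviation without regulation:", max(abs(v - 37.0) for v in unregulated))
```

With `gain=0.5` the deviation can never exceed the size of a single disturbance, while without regulation the variable performs a random walk and drifts freely.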

One successful example of Hard Alife is the “tortoise” robots designed by William Grey Walter (Holland, O., and Melhuish, C. 1999), which can be considered among the early projects of adaptive robotics. Another example is the model of organizations based on the principles of living systems that Stafford Beer developed in 1966; his idea was implemented in Chile in the Cybersyn project in the early 1970s (Miller Medina, E. 2005).

Alife has often been compared and linked to artificial intelligence (AI), since the two subjects overlap. However, Alife focuses specifically on the study of systems that resemble nature and its laws, so it is more closely related to biology, whereas AI mainly focuses on how human intelligence can be studied and replicated, so it is more closely related to psychology. The key difference lies in how they model their strategies. Most traditional AI models are top-down systems with a centralized controller that makes decisions based on access to all aspects of the global state. Alife systems, by contrast, are typically bottom-up: they are built from low-level agents that interact with each other simultaneously and whose decisions are based on information about, and directly affect, only their local environment (Bedau, M. A. 2003).

Research on Alife and related topics continued through the 1980s, but artificial life became a formal field of study when Christopher Langton coined the phrase “artificial life” in its current usage. Langton described artificial life as the study of life as it could be in any possible setting, and he organized the first Workshop on the Synthesis and Simulation of Living Systems at Los Alamos, New Mexico, in 1987. This event is widely recognized as the official birth of the field of artificial life. The scientific study of complex systems began at approximately the same time, also in New Mexico. Carlos Gershenson, a Mexican researcher at the Universidad Nacional Autónoma de México, works on the understanding and popularization of complex systems; his academic interests include self-organizing systems, complexity, and artificial life, in particular Boolean networks, self-organization, and traffic control. Boolean network models were created to study complex dynamic behavior in biological systems. They can be used to uncover the mechanisms that regulate or control the properties of a system, or to identify promising intervention targets (Schwab J.D., Kühlwein S. D., Ikonomi N., Kühl M. and Kestler H.A. 2020).
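For readers unfamiliar with Boolean networks, here is a minimal sketch of a random Boolean network in the style introduced by Stuart Kauffman: each node is either on or off and is updated synchronously by its own random Boolean function of k other nodes. Because the state space is finite, every trajectory eventually falls into a repeating cycle (an attractor). The function names, network size, and seeds are illustrative assumptions.

```python
import random

def random_boolean_network(n, k, seed=0):
    """Each of n nodes reads k randomly chosen inputs and owns a random lookup table."""
    rng = random.Random(seed)
    inputs = [rng.sample(range(n), k) for _ in range(n)]
    tables = [[rng.randint(0, 1) for _ in range(2 ** k)] for _ in range(n)]
    return inputs, tables

def update(state, inputs, tables):
    """Synchronous update: every node looks up its next value from its inputs."""
    new = []
    for node_inputs, table in zip(inputs, tables):
        index = 0
        for i in node_inputs:
            index = (index << 1) | state[i]
        new.append(table[index])
    return tuple(new)

def find_attractor(state, inputs, tables):
    """Iterate until a state repeats. Returns (attractor period, transient length)."""
    seen = {}
    t = 0
    while state not in seen:
        seen[state] = t
        state = update(state, inputs, tables)
        t += 1
    return t - seen[state], seen[state]

inputs, tables = random_boolean_network(n=8, k=2, seed=42)
start = tuple(random.Random(7).randint(0, 1) for _ in range(8))
print(find_attractor(start, inputs, tables))
```

Counting attractors and their periods over many starting states is one common way such models are used to characterize the possible stable behaviors of a regulatory system.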

Artificial life took on many different meanings before the 1980s, until Langton first defined the field as “life made by man rather than nature”: the study of man-made systems that exhibit behaviors characteristic of natural living systems. However, Langton later found structural problems with this definition and redefined the field as “the study of natural life, where nature is understood to include rather than to exclude, human beings and their artifacts” (Langton, C. G. 1998). With this definition, he considered human beings and everything they do as part of nature; therefore, a major goal of Alife should be to work toward a point where “artificial life” no longer contrasts in any fundamental way with “biology”. Today it is fairly common for biologists to use computational models that would have been considered Alife 20 years ago but are now part of mainstream biology (Bourne, P. E., Brenner, S. E., and Eisen, M. B. 2005).

Back in the twentieth century, one of the early intellectual cornerstones of Alife was laid by John von Neumann and Norbert Wiener. Von Neumann designed the first artificial life model by creating his famous self-reproducing, computation-universal cellular automaton. A cellular automaton is a regular spatial lattice of cells, each of which can be in one of a finite number of states; the lattice typically has one, two, or three spatial dimensions. Von Neumann tried to understand the fundamental properties of living systems, especially self-reproduction and the evolution of complex adaptive structures, by constructing simple formal systems that exhibited those properties. At about the same time, Wiener started applying information theory and the analysis of self-regulatory processes (homeostasis) to the study of living systems. The abstract constructive methodology of cellular automata still typifies much of artificial life, as does the abstract, material-independent methodology of information theory.

Current artificial life studies are related to several fields and overlap most with complexity, natural computing, evolutionary computation, language evolution, theoretical biology, evolutionary biology, cognitive science, robotics, artificial intelligence, behavior-based systems, game theory, bio-mimesis, network theory, and synthetic biology.

SYNTHETIC ECOLOGY (MODEL V01)

I have always been mesmerized by generative art: artworks constructed from a system, or a set of rules, that brings some degree of autonomy (self-governance) to the creation process. My interest in generative art grew as I began learning more about creative coding and studying the broad and rich categories of art that have code as their central characteristic.

Despite the depth of the term, my intention with generative art was straightforward. I was simply curious to introduce rules that set boundaries for the creation process and then let the computer, or any algorithmic procedure, follow those rules to generate new ideas, forms, shapes, colors, and patterns on my behalf.

After a series of studies and experimental projects on generative art, I came up with the idea of working on a set of rules as the essence of my project. I wanted to extend my interest in creative coding and learn more about artificial life. I was looking for ways of generating visual art as a living computation beyond the chemical solution. My ultimate goal was to give the visual elements a certain degree of autonomy and a certain control over their own production, so that they grow, develop, and perform as a group of autopoietic agents in real time through algorithmic computer renderings. Most notably, I wanted to remove my role as artist/creator from the creation equation entirely and shift to that of an observer who studies the results of the creation and finds more possible rules to extend the system.

My initial idea of developing an artificial synthetic ecosystem led me to create probable Alife (artificial organisms). This ecosystem is built from implanted collective data, and the goal is to re-engineer the organisms so that they have new abilities and purposes. I am not trying to create a project that simulates life or organisms digitally on the computer. Instead, my intention is to explore the possibilities of generating a living computation beyond the chemical solution. Therefore, this project was constructed to synthesize Alife as software based on collective data. I create part of this collective data by monitoring the underlying mechanics of biological phenomena and life, and by using genetic computational algorithms. But first, let’s see what we mean when we say life.

1-According to Webster’s Unabridged Dictionary (1913), “life is the state of being which begins with generation, birth, or germination, and ends with death; also, the time during this state continues; that state of an animal or plant in which all or any of its organs are capable of performing all or any of their functions”.

Although this definition is quite broad, it is not fully satisfying for my research. It describes what happens during a life rather than stating sufficient conditions for what it means to be alive.

2-According to Gerald Joyce, professor and researcher at the Salk Institute for Biological Studies and director of the Genomics Institute of the Novartis Research Foundation, life is a self-sustaining chemical system capable of undergoing Darwinian evolution.

This definition reflects a chemist’s emphasis on self-sustaining, organized systems. Joyce is best known for his work on in vitro evolution, for the discovery of the first DNA enzyme (deoxyribozyme), for his work on discovering potential RNA world ribozymes, and for his work on the origin of life (Gerald Joyce 2010). Although Joyce’s definition helps narrow down my description of life and gives me a better understanding of self-sustaining systems, I am still looking for a way to move beyond chemical solutions, since the core of my project is Soft Alife.

3-According to Lee Smolin, theoretical physicist and professor of physics at the University of Waterloo, life is a self-organized non-equilibrium system whose processes are governed by a program that is stored symbolically, and which can reproduce itself, including the program.

This definition again targets autonomously sustaining and organizing systems, and what interests me most is how it emphasizes the role of a program in governing these processes.

It is very complex to explain life through symbolic representations of life as a program. However, Smolin is a physicist who has made many contributions to quantum gravity theory, in particular to the approach known as loop quantum gravity, and he was more interested in the fundamental causes of life as a phenomenon, framed in mathematical terms.

Smolin’s definition adds a distinctive element by describing the program of life as something stored and represented symbolically, and it was enlightening for me to explore this topic more deeply. However, it seemed too complicated a concept for arranging my conceptual framework at this stage of my project.

But how could I boil it down to the simplest mathematical definition of life? This was my first struggle with the project: for weeks I tried to find a broad spectrum that could encompass a general definition of life while still having enough descriptive value to let me describe and understand the features of specific phenomena, and enough freedom to summarize the data and make it mathematically measurable.

After diving into the notion of artificial life, reading papers on topics related to Alife, finding many similarities, and trying to understand the essence of the most contemporary examples, I realized that I should treat the definition of life like a living phenomenon that develops through observation and examination, so that my project would have a dynamic foundation.

This brings me to my next point: a simplified version of a common definition of life in Alife, which says that life is simply a system that consumes energy in order to maintain patterns.

There are many ways to preserve patterns dynamically without being alive under the Webster definition. Salt crystals grow and cause more crystals to grow around them; they maintain patterns without being life-like. Any circular movement of fluid, even a small whirlpool, consumes energy to maintain a pattern so fragile that a slight change makes it disappear. Many video games and simulations include animals that grow and reproduce, and they dynamically maintain patterns. Are they really alive? With this definition, wherever you look in the world, if you see a pattern that is being preserved dynamically by consuming energy, you can see a bit of life, and it can be placed on this spectrum. In other words, what I am looking for with this definition is a broad spectrum that defines life as a process and allows me to run these processes on a suitable platform under certain conditions, just as a piece of software runs on a hardware platform. Therefore, the more general my definition and rules, the more platforms I can use to maintain the patterns, or in this case (Soft Alife), the functions.
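The definition of life as "consuming energy to maintain a pattern" can be illustrated with a toy model: a bit pattern is disturbed by random noise every step, and each repair is paid for from a finite energy budget. Once the energy runs out, the pattern drifts away. This is only an illustrative sketch with hypothetical parameters (`noise`, `repair_cost`), and, like the whirlpool example, nothing in it is alive; it simply shows how broad the definition is.

```python
import random

def maintain(pattern, energy, steps=100, noise=0.05, repair_cost=1, seed=0):
    """Returns (fraction of the pattern still intact, energy left) after `steps`."""
    rng = random.Random(seed)
    target = list(pattern)
    current = list(pattern)
    for _ in range(steps):
        # Decay: the environment randomly flips bits.
        for i in range(len(current)):
            if rng.random() < noise:
                current[i] ^= 1
        # Upkeep: while energy lasts, pay to restore every damaged bit.
        for i in range(len(current)):
            if current[i] != target[i] and energy >= repair_cost:
                current[i] = target[i]
                energy -= repair_cost
    intact = sum(c == t for c, t in zip(current, target)) / len(target)
    return intact, energy

print(maintain([1, 0] * 16, energy=10**6))   # plenty of energy: the pattern holds
print(maintain([1, 0] * 16, energy=20))      # energy runs out: the pattern decays
```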

There are many possible ways to design models to examine my initial idea. However, since my goal is to develop an artificial synthetic ecosystem, I need to construct a specific research method by arranging explicit rules (protocols) that give the components/organisms a certain degree of autonomy, a certain control over their own production so that they grow, develop, and perform as a group of autopoietic agents, and finally a certain degree of adaptation so that they survive. In “software” Alife, living systems can achieve these goals through self-organization.

Self-organizing

According to Kepa Ruiz Mirazo, a researcher at the University of the Basque Country (UPV/EHU) and a physicist who has devoted most of his career to studying the interface between chemistry and biology, with a particular interest in grounding the transition from one to the other, basic autonomy is the capacity of a system to manage the flow of matter and energy through it so that it can regulate internal self-constructive and interactive exchange processes under far-from-equilibrium thermodynamic conditions (Ruiz Mirazo, K., and Moreno, A. 2004). The concept of autonomy as applied to self-organization must therefore be distinguished from the similar term used loosely in robotics, where it refers to the ability to move and interact without depending on remote control.

The notion of autonomy lets us understand in depth an individual system that is able to act in relation to its own underlying goals and principles: a system that can be a genuine agent, rather than one whose functions are heteronomously defined (subject to external controls and impositions) from outside (Barricelli, N. A. 1963). Hence, one of the most important goals is to program a behavior-based system and let it autonomously establish its principles and develop over time, instead of micromanaging every aspect of the system.

The term self-organizing was coined by W. Ross Ashby (Ashby, W. R. 1947), an English psychiatrist and a pioneer of cybernetics (the study of communication and automatic control systems in both machines and living things), who has been widely influential in cybernetics, systems theory, and, more recently, complex systems. He described self-organization as a phenomenon in which local interactions in a system lead to the formation of global patterns or behaviors, such as the behavior of swarms, herds, and flocks. Two common cases of self-organization are self-replication and self-assembly. Self-replication is quite simple and only requires a replicator agent that can spread and clone its organization by itself, whereas self-assembly is a complex behavior related to collective intelligence; it requires cognition and the ability to anticipate unforeseen circumstances, which can be achieved by learning through sharing information, knowledge, and data among a large group of individual agents.
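Self-organization through purely local interactions can be sketched with a "boids"-style flocking model of the kind popularised by Craig Reynolds: each agent adjusts its velocity using only neighbours within a small radius (alignment, cohesion, separation), and flock-level order can emerge without any central controller. The weights, radius, speed cap, and the `alignment` order parameter below are illustrative choices, not tuned values.

```python
import math
import random

def flock_step(pos, vel, radius=5.0, sep_dist=1.0,
               w_align=0.05, w_cohere=0.01, w_separate=0.1, max_speed=1.0):
    """One step of a 2-D flock. Each agent sees only neighbours within `radius`."""
    new_vel = []
    for i, (p, v) in enumerate(zip(pos, vel)):
        neighbours = [j for j in range(len(pos))
                      if j != i and math.dist(p, pos[j]) < radius]
        vx, vy = v
        if neighbours:
            k = len(neighbours)
            avg_vx = sum(vel[j][0] for j in neighbours) / k
            avg_vy = sum(vel[j][1] for j in neighbours) / k
            cx = sum(pos[j][0] for j in neighbours) / k
            cy = sum(pos[j][1] for j in neighbours) / k
            # Alignment: match the neighbours' heading. Cohesion: move toward their centre.
            vx += w_align * (avg_vx - vx) + w_cohere * (cx - p[0])
            vy += w_align * (avg_vy - vy) + w_cohere * (cy - p[1])
            # Separation: push away from neighbours that are too close.
            for j in neighbours:
                if math.dist(p, pos[j]) < sep_dist:
                    vx += w_separate * (p[0] - pos[j][0])
                    vy += w_separate * (p[1] - pos[j][1])
        speed = math.hypot(vx, vy)
        if speed > max_speed:                 # cap the speed to keep the flock stable
            vx, vy = vx / speed * max_speed, vy / speed * max_speed
        new_vel.append((vx, vy))
    new_pos = [(p[0] + v[0], p[1] + v[1]) for p, v in zip(pos, new_vel)]
    return new_pos, new_vel

def alignment(vel):
    """Length of the mean unit heading: 1 means every agent points the same way."""
    ux = uy = 0.0
    for vx, vy in vel:
        s = math.hypot(vx, vy) or 1.0
        ux += vx / s
        uy += vy / s
    return math.hypot(ux, uy) / len(vel)

rng = random.Random(3)
pos = [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(30)]
vel = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(30)]
print("alignment before:", round(alignment(vel), 2))
for _ in range(200):
    pos, vel = flock_step(pos, vel)
print("alignment after: ", round(alignment(vel), 2))
```

No agent ever reads the global state; any collective heading that appears is a product of repeated local adjustments.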

Adaptation is one of the crucial features of a living system, essential for survival and for maintaining what self-organization has achieved. Carlos Gershenson defined adaptation as a change in an agent or system in response to a state of its environment, in order to survive or achieve certain goals. Gershenson also explained that adaptation occurs at various time scales. At a slow scale (several lifetimes), adaptation is called evolution; at a medium scale (one lifetime), it is called development (including morphogenesis and cognitive development); and at a fast scale (a fraction of a lifetime), it is called learning (Gershenson, C. 2010).

Most traditional hard and soft Alife systems lack the genuine interaction and adaptability found in natural phenomena; instead, they were designed around the ability to predict and control. Although genuine adaptability is still a desired property in contemporary artificial life studies, different degrees of adaptation can be achieved by combining approaches from computer science (evolutionary computation), the cellular growth seen in nature (biological development), developmental processes related to cognition (cognitive development), and machine learning (artificial neural networks).

To go deeper into this topic: artificial evolution has advanced considerably through genetic algorithms in computer science and the broad field of evolutionary computation, which aims at computational intelligence. The primary motive for using evolutionary algorithms is to search for sufficient, practical solutions to problems that are too complex to examine with more traditional Alife methods. For instance, soft Alife systems such as Tierra (Ray, T. S. 1991) and Avida (Ofria, C. A. 1999) have both been used to study the evolution of “digital organisms”, using evolutionary algorithm frameworks to understand features of living systems such as robustness, the evolution of complexity, the effect of high mutation rates, the evolution of complex systems, mass extinctions, and ecological networks.
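A minimal genetic algorithm, sketched on the standard "OneMax" toy problem (fitness is simply the number of 1 bits in a genome), shows the selection, crossover, and mutation loop that evolutionary computation builds on. Tierra and Avida work quite differently (self-replicating programs compete for CPU time and memory), so this is only an illustration of the general technique; all parameter values are arbitrary.

```python
import random

def evolve(genome_length=40, pop_size=60, generations=100,
           mutation_rate=0.01, seed=0):
    """Minimal genetic algorithm on OneMax. Returns the best fitness in each generation."""
    rng = random.Random(seed)
    fitness = sum                                     # OneMax: count the 1s

    def tournament(pop):
        # Binary tournament selection: the fitter of two random individuals.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    pop = [[rng.randint(0, 1) for _ in range(genome_length)]
           for _ in range(pop_size)]
    best_per_gen = []
    for _ in range(generations):
        best_per_gen.append(max(fitness(g) for g in pop))
        children = [max(pop, key=fitness)[:]]         # elitism: keep the best unchanged
        while len(children) < pop_size:
            mum, dad = tournament(pop), tournament(pop)
            cut = rng.randrange(1, genome_length)     # one-point crossover
            child = mum[:cut] + dad[cut:]
            # Point mutation: each bit flips with probability mutation_rate.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        pop = children
    return best_per_gen

history = evolve()
print("best fitness, first vs last generation:", history[0], history[-1])
```

Because the best genome is always carried over unchanged (elitism), the best fitness can never decrease from one generation to the next.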

Tierra and Avida were both sources of motivation when I started working on my protocols. Tierra is a computer simulation developed by the ecologist Thomas S. Ray in the early 1990s and written in the C programming language. It is an evolvable system capable of adaptive evolution, in which self-replicating programs mutate and can improve over time (“Tierra (computer simulation)” 2014).

Avida is also an Alife system, inspired by Tierra. It is designed as a platform for studying the evolutionary biology of self-replicating and evolving computer programs. The first version of Avida was designed in 1993 by Charles Ofria, Chris Adami, and C. Titus Brown at Caltech, and it has been fully re-engineered by Ofria several times since then (“Avida” 2014).

Although the system I am developing has a much simpler arrangement of evolutionary algorithm frameworks, studying how these two systems were developed encouraged me to dive deeper into the topic, set SMARTER goals, and create more specific plans to extend my system.

About SYNTHETIC ECOLOGY Protocols

My core research is divided into three domains: first, arranging a series of protocols as the foundation of my code/system; second, examining the outcomes by giving the system enough time to run its computations; and finally, exploring and analyzing the results to extend the protocols further until I can create a synthetic ecosystem. My ultimate goal in organizing these protocols is to gain a deeper understanding of Alife system design and to create an ecosystem of agents that can constantly rebuild itself and maintain a pattern by exchanging materials with its surrounding environment. It should respond to stimuli, adapt to its environment, reproduce, and transfer imperfect information to its offspring.

These protocols are mainly related to, and inspired by, the most commonly classified themes of artificial life that I found fascinating and valuable for my project during my study of Alife. Themes such as the origins of life, autonomy, self-organization, and adaptation (evolution, development, and learning) encouraged me to look more closely at autonomy in design, which concentrates on the properties of living systems. By looking into examples and papers on ecology, artificial societies, computational biology, and artificial chemistries, I could also expand my initial idea of the living system toward the concept of life at different scales. At the moment, information theory and living technology are my main areas of study, to deepen my understanding of living systems and prepare for the next step of this project.

As the hands-on aspect of my project, I need to examine my method and protocols by arranging a living model and finding ways to observe and monitor the outcomes. Then I can introduce more complex subprotocols to the system and let the model extend itself while the agents find their own aims to develop and survive. It is also important to highlight that survival is not the ultimate goal of the first model: model V01 aims to analyze time-to-event historical data (TTE data). Analyzing TTE data depends on a complex statistical framework, and the results are only valid when the data meet certain assumptions. The outcomes and hypotheses are therefore not only about whether an event occurred but also about when and how it occurred. These techniques combine data from multiple time points in each life cycle, can take into account partial (censored) information on each subject, and provide unbiased survival estimates for each element, together with calculated rates, time ratios, and hazard ratios. In short, if the model works adequately, it will be able to generate survival estimates for each agent/element after running enough computations, so that it can slowly learn and demonstrate how the probabilities of events occurring change over time.
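One standard way to compute such survival estimates is the Kaplan-Meier estimator, which handles censored records, for example agents that are still alive when a run stops. The sketch below assumes each agent’s record is a lifetime plus a flag saying whether its death was observed. It covers only the survival curve; hazard ratios would need a regression model such as Cox proportional hazards, which is not shown.

```python
def kaplan_meier(durations, observed):
    """Kaplan-Meier estimate of the survival function S(t).
    durations: time at which each agent died or stopped being observed.
    observed:  True if the death was seen, False if the record is censored."""
    data = sorted(zip(durations, observed))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # Group every record that ends at the same time t.
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths:
            # Multiply by the conditional probability of surviving past t.
            survival *= 1 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= removed      # both deaths and censored agents leave the risk set
    return curve

# Hypothetical lifetimes (in simulation ticks); False = still alive when the run ended.
curve = kaplan_meier([3, 5, 5, 8, 10, 12], [True, True, False, True, False, True])
for t, s in curve:
    print(t, round(s, 3))   # 3 0.833 / 5 0.667 / 8 0.444 / 12 0.0
```

Censored agents contribute to the risk set up to the time they leave it, which is what keeps the estimate unbiased when some runs stop early.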

Protocols

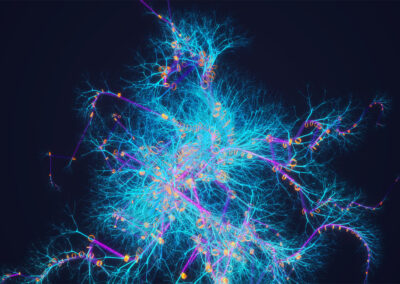

1-Alife organisms need to interact with each other to construct their ecosystem through their connection portals.

The interaction of individuals of the same species defines societies. The computational modeling of social systems enables the systematic exploration of possible social interactions. There are two common approaches to modeling social interaction: the AI approach and the Alife approach.

AI approaches to synthesizing behavior are based on the sense-model-plan-act architecture, in which behavior takes place inside the agent, largely independently of the world, and has more to do with logical inference over internal representations than with real-time interaction with the world. Alife is closely tied to a notion of life that involves a biological embodiment and its environment, so it prefers to synthesize behaviors in order to study the conditions of their emergence rather than pre-specifying them. This leads to the study of adaptive behavior, based mainly on ethology (the scientific study of animal behavior) and on the dynamics of closed sensorimotor loops.

My approach leans more toward Alife than AI, and the connection portal is one simplified way to achieve adaptive behavior in the system: it is a form of binding parameter that can hook onto another behavioral parameter. The connection is bidirectional; however, it is ultimately led by the agent that moves faster (the stronger one). Since the system gradually grows and introduces more agents, maintaining communication (data transfer) would require massive CPU resources, so as a solution I designed a type of barcode communication that can easily monitor and transfer data between all the agents without needing massive computational resources.
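The barcode communication and the connection portal are described only in outline, so the following is a hypothetical sketch of how such a scheme might work: each agent’s identifier and behavioural parameters are quantised and packed into a compact 32-bit code (written as eight hex characters), and a portal lets the faster agent lead while the follower drifts toward its parameters. The bit layout, the `pull` blending factor, and every name here (`Agent`, `encode_barcode`, `decode_barcode`, `connect`) are assumptions, not the project’s actual implementation.

```python
from dataclasses import dataclass

# Hypothetical 32-bit barcode layout: a 12-bit agent id followed by five
# behavioural parameters, each quantised to 4 bits (16 levels).
ID_BITS, PARAM_BITS, N_PARAMS = 12, 4, 5
LEVELS = 2 ** PARAM_BITS - 1          # highest quantisation level (15)

@dataclass
class Agent:
    agent_id: int
    params: list                      # N_PARAMS behavioural parameters in [0.0, 1.0]
    speed: float

def encode_barcode(agent):
    """Pack the id and quantised parameters into one integer, returned as 8 hex chars."""
    code = agent.agent_id & ((1 << ID_BITS) - 1)
    for p in agent.params:
        level = round(min(max(p, 0.0), 1.0) * LEVELS)
        code = (code << PARAM_BITS) | level
    return f"{code:08x}"

def decode_barcode(barcode):
    """Unpack a barcode into (agent id, approximate parameters)."""
    code = int(barcode, 16)
    params = []
    for _ in range(N_PARAMS):
        params.append((code & LEVELS) / LEVELS)   # lowest 4 bits hold the last parameter
        code >>= PARAM_BITS
    return code, params[::-1]

def connect(a, b, pull=0.2):
    """Bidirectional portal: both agents can read each other's barcode, but the
    faster ('stronger') agent leads and the follower moves toward its parameters."""
    leader, follower = (a, b) if a.speed >= b.speed else (b, a)
    _, leader_params = decode_barcode(encode_barcode(leader))
    follower.params = [(1 - pull) * f + pull * l
                       for f, l in zip(follower.params, leader_params)]
    return leader.agent_id

a = Agent(7, [0.0, 1.0, 0.2, 0.6, 1.0], speed=2.0)
b = Agent(8, [0.5] * 5, speed=1.0)
print(encode_barcode(a))    # 0070f39f
print(connect(a, b), [round(x, 2) for x in b.params])
```

Exchanging a fixed-size code instead of full agent objects keeps the cost of each message constant as the population grows, at the price of quantising the parameters.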

2-Behavioral parameters are fundamental properties of organisms and are acquired through learning. These properties are protected as part of the memory.

Learning, the change in an organism’s capacities or behavior brought about by experience (Wilson and Keil, 1999), is the core behavior of adaptive living systems. Although there are several Alife approaches to modeling learning, most of the early achievements were influenced by machine learning: in particular by artificial neural networks, the well-known Alife approach to learning inspired by biological neural networks, and by reinforcement learning, which builds on behaviorist psychology to explain how adaptation occurs through interaction with the environment.

My method is a much simplified form of reinforcement learning. The agent learns to achieve a goal in an uncertain, potentially complex environment; the goal is to maximize the total reward and to save the rewards as history (barcodes) in the memory. Over time, the memory therefore comes to include an artificial genome: an instruction model for developing and holding survival patterns. Each Alife organism can be regarded as an individual dynamical system only if it can grow adequately, and this dynamical system is able to self-replicate if it finds a suitable environment. The genome defines the limits of self-replication (in quantity and quality). An Alife organism reproduces by cell division when it is instanced.
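The text calls this a much simplified form of reinforcement learning. As a point of reference only, the sketch below uses epsilon-greedy action selection with a running mean reward, one of the simplest standard reinforcement-learning techniques, swapped in here as an assumption. The action names, rewards, and the `history` list (a stand-in for the barcode memory) are hypothetical.

```python
import random


class LearningAgent:
    """Hypothetical agent using an epsilon-greedy value estimate.

    `history` is a plain list of (action, reward) pairs standing in for the
    barcode memory described in the text.
    """

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.values = {a: 0.0 for a in self.actions}  # running mean reward per action
        self.counts = {a: 0 for a in self.actions}
        self.history = []

    def choose(self):
        untried = [a for a in self.actions if self.counts[a] == 0]
        if untried:                                    # try every action once first
            return untried[0]
        if self.rng.random() < self.epsilon:           # occasionally explore
            return self.rng.choice(self.actions)
        return max(self.actions, key=self.values.get)  # otherwise exploit

    def learn(self, action, reward):
        self.counts[action] += 1
        # Incremental mean: moves the estimate toward the observed reward.
        self.values[action] += (reward - self.values[action]) / self.counts[action]
        self.history.append((action, reward))


# Hypothetical environment: fixed rewards for three moss behaviors.
REWARDS = {"spread": 1.0, "wait": 0.2, "retreat": -0.5}

agent = LearningAgent(REWARDS)
for _ in range(200):
    action = agent.choose()
    agent.learn(action, REWARDS[action])

print(max(agent.values, key=agent.values.get))  # spread
```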

A well-developed memory would, to some degree, be able to characterize states and prediction models such as fear, desire, and escape.

3- Every digital organism has its own independent memory territory (GPU/CPU-based data). The territory can be protected as a private component (encrypted data). It cannot be deleted, but it can be passed on from an agent to its offspring.

4- The memory is expandable; the only limit is the capacity of the main virtual memory (which is bound by the hardware but upgradable).

5- Each organism can only execute algorithms that are built into its own memory. Organisms cannot directly access other agents' memory.

6- Each organism runs its executable algorithms at its own distinct speed.

Just as microscopes enabled microbiology, computers have empowered the study of complex systems made of many non-linearly interacting components, and have shaped the fields of Alife computational modeling and systems biology. Computers allow Alife computational models to study the complexity of biological systems by measuring the transmission, storage, and manipulation of information at different scales, since these are essential features of living systems. Because my ecosystem lives, mathematically speaking, on computational resources (CPU and GPU memory), these resources must be distributed logically among all the components/agents, so that the system can grow and scale within specified boundaries and no odd mutations or feedback loops destroy the ecosystem. For this reason, a calculation speed limit was added to the system.

The calculation speed is constrained by three factors:

6a- The capacity of the main virtual computational resources (CPU power) dedicated to the whole system.

6b- The number of tasks (actions/reactions) the organism is allowed to perform. (This limit corresponds to the capabilities of each individual's memory.)

6c- The number of connection portals the organism has developed.
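One hypothetical way to combine factors 6a to 6c into a per-organism speed cap is sketched below: the global CPU budget is split into equal fair shares, and an organism's cap is the smaller of that share and what its tasks and portals demand. The cost constants, the even split, and all names are assumptions; the text does not give a formula.

```python
from dataclasses import dataclass


@dataclass
class Organism:
    name: str
    allowed_tasks: int  # 6b: tasks (actions/reactions) its memory currently supports
    portals: int        # 6c: connection portals it has developed


def speed_limits(organisms, cpu_budget, ops_per_task=10.0, ops_per_portal=5.0):
    """Return a hypothetical operations-per-tick cap for each organism.

    6a: the system-wide CPU budget is split evenly into a fair share.
    6b/6c: an organism never needs more than its tasks and portals demand.
    The cap is whichever of the two is smaller.
    """
    fair_share = cpu_budget / len(organisms)
    limits = {}
    for org in organisms:
        demand = org.allowed_tasks * ops_per_task + org.portals * ops_per_portal
        limits[org.name] = min(fair_share, demand)
    return limits


population = [
    Organism("basalt", allowed_tasks=1, portals=0),
    Organism("moss", allowed_tasks=3, portals=2),
    Organism("light", allowed_tasks=20, portals=4),
]
print(speed_limits(population, cpu_budget=300.0))
# {'basalt': 10.0, 'moss': 40.0, 'light': 100.0}
```

Capping at the fair share keeps any single organism from monopolizing the CPU. A variant could redistribute the unused share (here 150 of the 300 units) among organisms whose demand exceeds their cap.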

Model V01 (Organisms that can adapt -versus- organisms that can react)

Life is simply a system that consumes energy in order to maintain patterns

With the first model, I tried to create a controlled, almost isolated environment for growing underwater moss on basalt bedrock. The model was created to serve three research purposes:

1- To build on the conceptual framework described above and test the utility of its protocols and the system design.

2- To find affordable ways to monitor and observe the outcomes based on TTE data, and to discover more possibilities for extending my main idea, the Alife ecosystem.

3- To explore my research topics and break them down into more specific questions based on the outcomes.

This model is an exclusive piece of the ecosystem, and plant growth is a crucial part of any ecosystem. Among the many plants, I chose moss for the first model because mosses are thought to be among the earliest land plants, which helped give the earth its first fresh breath of clean air and made life possible for the creatures that followed. My artificial moss plays the same role: it needs to find its way to develop colonies and release oxygen into the environment. However, oxygen is a byproduct of photosynthesis, which requires ambient light and a sufficient supply of nutrients. Mosses have primitive roots called rhizoids, only one cell thick, that hold the plants in place on bedrock and absorb the necessary nutrients (in this model, nutrients can be considered energy). So even in this most simplified life scenario, there is a complex challenge of energy consumption between the elements.

Plant roots extracted nutrients from bedrock, leaving behind vast quantities of chemically altered rock that could react with CO2 and so draw it out of the atmosphere. Non-vascular plants like mosses do not have deep roots, so it was long thought that they did not behave in the same way. However, experiments have shown that a common moss (Physcomitrella patens) can also weather rock: after about 130 days, rocks with moss living on them had weathered significantly more than bare ones, and about as much as they would have if vascular plants were living on them. The secret seems to be that the moss produces a wide range of organic acids that can dissolve rock.

Therefore, even though there are only three elements/agents in this model (1- basalt rock as the platform, 2- a single moss cell, 3- light), they still need either to fight for their own survival, which would eliminate the others and collapse the system, or to adjust their growth patterns and behaviors to avoid conflicting with each other's interests and to keep each life cycle stable.

Each of these elements has a memory. They can learn or save sequences of events, use them to create survival strategies, and discover ways to interact with the other elements and adapt to the environment. As I mentioned, this system is not a simulation; it is based on synthesizing behaviors to study the conditions of emergence rather than pre-specifying them. Although this model has a straightforward storyline, its back end is extremely complicated and unpredictable.

Do they initiate adaptive behaviors and form some kind of closed sensorimotor loop to create a balanced environment, or do they gradually lean toward odd survival modes and demolish the ecosystem?

These are the questions I need to examine by giving the system an adequate amount of time to run and find its purpose. My role during this process is to observe and analyze all the motives by studying the outcomes and discovering possibilities to extend the system.

Analyzing the Outcomes

Much like machine learning approaches, my system needs to be trained. Training is achieved by repeatedly running the same scenario/equation to collect enough memory/data, so that the system can gradually learn to predict the consequences of each decision. Even for a simple system like the one I am trying to develop, it can take thousands of attempts to fill the memory with adequate behavior patterns before the system shows any spikes of meaningful decision-making. During my study I faced many difficulties in growing this system, but one of the most complicated issues was finding a way to collect and monitor all of this massive memory/data and to apply a practical method of learning through experience. For instance, each try can generate thousands of findings inside the system, and depending on the duration of each life span, the output can exceed twenty gigabytes of raw data for ten hours of living computation. So no matter how much HDD (hard disk drive) storage I add to my database, I would not be able to train and extend this system sufficiently within my lifetime. Because running each life span requires the system to review all the previous findings, every additional record makes the next run slower; very soon the system hits the read/write speed limit and reaches a point where each try can take weeks or even months to finish.
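The slowdown follows from simple arithmetic: if every run must re-read everything stored before it, the review time grows linearly with the run index, and the cumulative review time grows quadratically, as D·N(N-1)/(2R) for N runs of D bytes each, read at R bytes per second. The sketch below uses the text's figure of about 20 GB per ten-hour life span; the 150 MB/s disk read speed is an assumption.

```python
RUN_OUTPUT_BYTES = 20e9         # ~20 GB of raw data per life span (from the text)
RUN_LIVING_SECONDS = 10 * 3600  # 10 hours of living computation (from the text)
READ_BYTES_PER_SEC = 150e6      # ASSUMED sustained HDD read speed (~150 MB/s)


def review_seconds(run_index):
    """Seconds needed to re-read everything stored by runs 1..run_index-1."""
    return (run_index - 1) * RUN_OUTPUT_BYTES / READ_BYTES_PER_SEC


def run_hours(run_index):
    """Wall-clock hours for one run: review of past data plus the living computation."""
    return (review_seconds(run_index) + RUN_LIVING_SECONDS) / 3600


for n in (1, 100, 1000, 5000):
    stored_tb = n * RUN_OUTPUT_BYTES / 1e12
    print(f"run {n:>4}: {stored_tb:6.2f} TB stored, run takes ~{run_hours(n):6.1f} h")
```

Under these assumptions, run 1000 takes about 47 hours and run 5000 about 195 hours (roughly eight days), with 100 TB stored by then, which shows how quickly each try drifts toward the weeks-long range.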

Although there are many advanced coding solutions to this memory management issue, I did not consider the problem only a technical one, but rather an in-depth conceptual challenge around the phenomenon of learning. This challenge put my project on pause and, in a way, reminded me of my own struggle with learning as a child. I suffered from a complex learning disorder during my childhood, which prevented me from presenting any school subject without memorizing the whole text word by word. The anxiety of memorizing everything reached a point where it eventually caused me to stutter until the age of twelve. Metaphorically, I took the learning/memory management issue in my system as a reflection of my childhood memories in the ecosystem I want to create, and the key to decoding it had to come from my own vantage point, even if I ended up with a strategy unrelated to common programming methods.

In my adolescence, one of the solutions I discovered to overcome my stress and manage my mind was to draw fictional diagrams for each concept I wanted to learn. By simplifying and abstracting each topic visually and making associations of meaning, I came up with a graphical learning system, not only to manage my thoughts but also as a mental game for brainstorming. Inspired by the results, I later developed this system further and used it in different ways in my coding projects to manage complicated workloads. So I thought it would be valuable research to build a similar graphical system that teaches my ecosystem to learn from experience and judge each probable result before taking any action.

Delving into this topic, instead of recording all the memory/data and micromanaging each step, I simplified all the measures into three categories (simple, intermediate, and complex behaviors), each with a specific time horizon (the length of time over which a behavioral response starts and finishes).

1- Simple actions are merely reactive/reflexive, triggered by a cue or an interaction from another agent, on a short time horizon; similar to Hydra (tiny aquatic animals that look like floating tubes with arms).

2- Intermediate actions are exploratory and motivated; they usually occur when an agent pursues a certain purpose in order to persist, and they are driven by strategic goals on a medium time horizon; similar to a bony fish or a rat.

3- Finally, complex actions are executive and driven; they happen on a long time horizon and are directed by objectives that hold the agent's attention; similar to a monkey.
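A minimal sketch of sorting recorded actions into the three categories by time horizon alone. The text gives no numeric horizons, so the tick thresholds below are hypothetical placeholders.

```python
from enum import Enum


class Category(Enum):
    SIMPLE = 1        # reactive/reflexive, short horizon (Hydra-like)
    INTERMEDIATE = 2  # exploratory/motivated, medium horizon (fish/rat-like)
    COMPLEX = 3       # executive/driven, long horizon (monkey-like)


# Hypothetical thresholds, in simulation ticks; the text gives no numbers.
SHORT_HORIZON = 10
MEDIUM_HORIZON = 200


def categorize(start_tick: int, end_tick: int) -> Category:
    """Assign an action to a category from its time horizon alone."""
    horizon = end_tick - start_tick
    if horizon <= SHORT_HORIZON:
        return Category.SIMPLE
    if horizon <= MEDIUM_HORIZON:
        return Category.INTERMEDIATE
    return Category.COMPLEX


print(categorize(0, 4), categorize(50, 180), categorize(0, 900))
# Category.SIMPLE Category.INTERMEDIATE Category.COMPLEX
```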

By categorizing all the inner activities of the system, I assembled a filter that captures only the behaviors related to the learning process. Part of the memory management issue still remained unsolved, and that is where my old graphical system became handy. Any string of data in my system consists of an array of bytes that stores a sequence of elements encoded as numeric data, and each activity can be recorded as a history/memory in such strings. There are many programming approaches for converting data from one form to another; for instance, we can convert numeric data to pixels and shift the calculation load from the CPU (central processing unit) to a modern GPU (graphics processing unit), which is much quicker and more manageable for this kind of work.
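The conversion from numeric records to pixels can be illustrated with Python's standard `struct` module: an activity record is packed into bytes, and the bytes are laid out as rows of 8-bit grayscale values. The record layout and row width are assumptions, and the step of uploading such an array to the GPU (for example as a texture) is not shown.

```python
import struct

# Hypothetical record layout: category (uint8), start tick (uint32),
# end tick (uint32), score (float32), little-endian with no padding.
RECORD_FORMAT = "<BIIf"


def encode_activity(category, start, end, score):
    return struct.pack(RECORD_FORMAT, category, start, end, score)


def bytes_to_pixels(data, width=8):
    """Lay bytes out as rows of 8-bit grayscale pixels (0-255), zero-padded to full rows."""
    padded = data + bytes((-len(data)) % width)
    return [list(padded[i:i + width]) for i in range(0, len(padded), width)]


def pixels_to_bytes(pixels, length):
    """Flatten pixel rows back to bytes and drop the padding."""
    return bytes(v for row in pixels for v in row)[:length]


record = encode_activity(2, 120, 260, 0.75)
image = bytes_to_pixels(record)
restored = struct.unpack(RECORD_FORMAT, pixels_to_bytes(image, len(record)))
print(len(record), len(image), restored)  # 13 2 (2, 120, 260, 0.75)
```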

Imagine a barcode: a very small image (sometimes around 1 kilobyte) consisting of parallel black and white bars that can be read by a barcode scanner. My approach to collecting and analyzing memories was similar to the barcode system, but instead of encoding data as black and white bars, I built an internal system that generates graphs based on the category of each activity, carrying its time horizon and score. These graphs represent when and how an event/action occurred, with details such as calculated rates, time ratios, and hazard ratios, to provide unbiased survival estimates for that specific action. The graphs are very small, do not require a massive database to store, and can primarily be analyzed by GPU algorithms. Each agent is therefore able to review consequences by analyzing prior scores for similar actions, gradually attaining a form of critical thinking and elevating its actions from the simple category toward more meaningful decisions.
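The graph-based memory is not specified in detail, so the following is only a dictionary-based stand-in under stated assumptions: each action is reduced to a small quantized key (its "barcode"), an agent looks up the mean prior score of similar actions before acting, and a crude event rate (deaths per tick of exposure) is compared between actions as a rough analogue of a hazard ratio. This is not a proportional-hazards survival model, and all names are hypothetical.

```python
from collections import defaultdict
from statistics import mean


class BarcodeMemory:
    """Hypothetical compact memory keyed by a quantized 'barcode' of each action."""

    def __init__(self, horizon_bucket=50):
        self.horizon_bucket = horizon_bucket
        self.entries = defaultdict(list)  # barcode -> [(score, horizon, died), ...]

    def barcode(self, action, category, horizon):
        # Similar actions share a barcode: same action, same category,
        # and a time horizon in the same bucket.
        return (action, category, horizon // self.horizon_bucket)

    def record(self, action, category, horizon, score, died=False):
        self.entries[self.barcode(action, category, horizon)].append((score, horizon, died))

    def expected_score(self, action, category, horizon):
        """Mean prior score for similar actions, or None if never seen."""
        outcomes = self.entries.get(self.barcode(action, category, horizon))
        return mean(score for score, _, _ in outcomes) if outcomes else None

    def event_rate(self, action):
        """Crude hazard: deaths per tick of exposure over all records of `action`."""
        deaths = exposure = 0
        for (name, _, _), outcomes in self.entries.items():
            if name == action:
                deaths += sum(died for _, _, died in outcomes)
                exposure += sum(horizon for _, horizon, _ in outcomes)
        return deaths / exposure if exposure else None


memory = BarcodeMemory()
memory.record("spread", 2, 120, 0.8)
memory.record("spread", 2, 140, 0.6, died=True)
memory.record("spread", 2, 40, 0.2, died=True)
memory.record("wait", 1, 100, 0.1, died=True)
memory.record("wait", 1, 100, 0.3)

print(memory.expected_score("spread", 2, 130))                  # mean of 0.8 and 0.6
print(memory.event_rate("spread") / memory.event_rate("wait"))  # crude rate ratio
```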

Another notable advantage of these graphical memories is real-time access to a relatively precise estimate of learning improvement during the training cycles. By comparing the number of complex and intermediate actions per cycle, I built an analysis system that indicates whether the agents act more intelligently as the system ages, or whether the outcomes are merely randomized by a vast number of variables. Given that the restricted structure of my rules left no room for glitches or mutations, my studies over the last five months showed a 3.2% improvement in this measure of intelligence among the developer agents, which is a significant result for such a short period.
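A sketch of the per-cycle comparison, assuming the measure is the share of intermediate and complex actions among all actions in a cycle, averaged over an early and a late window. The text does not say whether the reported 3.2% is an absolute or a relative change, so the function returns both; the counts below are invented for illustration and are not data from the installation.

```python
def non_simple_share(cycle):
    """Fraction of a cycle's actions that are intermediate or complex."""
    total = cycle["simple"] + cycle["intermediate"] + cycle["complex"]
    return (cycle["intermediate"] + cycle["complex"]) / total if total else 0.0


def learning_trend(cycles, window=3):
    """Compare the mean non-simple share of the first and last `window` cycles.

    Returns (change in percentage points, relative change or None).
    """
    if len(cycles) < 2 * window:
        raise ValueError("need at least two full windows of cycles")
    early = sum(non_simple_share(c) for c in cycles[:window]) / window
    late = sum(non_simple_share(c) for c in cycles[-window:]) / window
    relative = (late - early) / early if early else None
    return 100 * (late - early), relative


# Hypothetical per-cycle counts, not data from the installation.
cycles = (
    [{"simple": 90, "intermediate": 10, "complex": 0}] * 3
    + [{"simple": 85, "intermediate": 15, "complex": 0}] * 3
)
points, relative = learning_trend(cycles)
print(f"{points:.1f} percentage points, {relative:.0%} relative")
```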

While studying the outcomes, I realized that although the general inner activities leaned slightly toward balance as the system got older, there is still competition for resources between the developing agents that are responsible for evolving the ecosystem. This competition is mostly a mixture of intermediate actions, and so far I have not found any glimmer of complex behavior. This leads me to the next step of the project: designing strategic rules/protocols that push the competitive relationship beyond the well-known survival scheme in this synthetic community. Changing the rules in this setting can enormously alter the path along which the ecosystem develops, and it offers a prospect to study, practice, and eventually form my own theories of evolution. Examining natural phenomena gives me a considerably clearer view of how self-sustaining systems work. But since in this project I have no obligation to follow precisely how nature sorts things out, I can redefine the concepts and ideas associated with my project to reconstruct the validity and reliability of the data, and steer my system toward exploring unknown zones, beyond underlying assumptions about the essence of reality, synthetic and nonsynthetic nature.

Nikzad Arabshahi, 2022, Den Haag, The Netherlands

Resources

Ashby, W. R. (1947b). Principles of the self-organizing dynamic system. J. Gen. Psychol. 37, 125–128. doi:10.1080/00221309.1947.9918144

Avida. (2014). In Wikipedia https://en.wikipedia.org/wiki/Avida

Bedau, M. A. (2008). “What is life?,” in A Companion to the Philosophy of Biology, eds S. Sahotra and A. Plutynski (Oxford, UK: Blackwell Publishing Ltd), 455–471.

Bedau, M. A. (2003). Artificial life: organization, adaptation, and complexity from the bottom up. Trends in Cognitive Sciences. 7, 505–512. doi:10.1016/j.tics.2003.09.012

Berlekamp, E. R., Conway, J. H., and Guy, R. K. (1982). Winning Ways for Your Mathematical Plays, Volume 2: Games in Particular. London: Academic Press.

Bourne, P. E., Brenner, S. E., and Eisen, M. B. (2005). PLoS computational biology: a new community journal. PLoS Computational Biology. 1:e4. doi:10.1371/journal.pcbi.0010004.

Barricelli, N. A. (1963). Numerical testing of evolution theories. Acta Biotheoretica. 16, 99–126. doi:10.1007/BF01556602

Gershenson, C. (2010). Computing networks: a general framework to contrast neural and swarm cognitions. Paladyn 1, 147–153. doi:10.2478/s13230-010-0015-z

Gerald Joyce. (2010). In Wikipedia. https://en.wikipedia.org/wiki/Gerald_Joyce

Hutton, T. J. (2010). Codd’s self-replicating computer. Artificial Life. 16, 99–117. doi:10.1162/artl.2010.16.2.16200

Holland, O., and Melhuish, C. (1999). Stigmergy, self-organization, and sorting in collective robotics. Artificial Life. 5, 173–202. doi:10.1162/106454699568737

Schwab, J. D., Kühlwein, S. D., Ikonomi, N., Kühl, M., and Kestler, H. A. (2020). Concepts in Boolean network modeling: what do they all mean? Computational and Structural Biotechnology Journal. 18, 571–582.

Langton, C. G. (1990). Computation at the edge of chaos: phase transitions and emergent computation. Physica D 42, 12–37. doi:10.1016/0167-2789(90)90064-V

Langton, C. G. (1998). A new definition of artificial life.

Mazlish, B. (1995). The man-machine and artificial intelligence. Stanford Humanities Review 4, 21–45.

Miller Medina, E. (2005). The State Machine: Politics, Ideology, and Computation in Chile, 1964-1973. PhD thesis, Cambridge, MA: MIT.

Ofria, C. A. (1999). Evolution of genetic codes. PhD thesis, California: California Institute of Technology.

Ofria, C. A., and Wilke, C. O. (2004). Avida: a software platform for research in computational evolutionary biology. Artificial Life. 10, 191–229. doi:10.1162/106454604773563612

Ruiz-Mirazo, K., and Moreno, A. (2004). Basic autonomy as a fundamental step in the synthesis of life. Artificial Life. 10, 235–259. doi:10.1162/1064546041255584

Reynolds, C. W. (1987). Flocks, herds, and schools: a distributed behavioral model. Computer Graphics. 21, 25–34. doi:10.1145/37402.37406

Tierra (computer simulation). (2014). In Wikipedia https://en.wikipedia.org/wiki/Tierra_(computer_simulation)

Von Neumann, J. (1951). “The general and logical theory of automata,” in Cerebral Mechanisms in Behavior-The Hixon Symposium, 1948 (Pasadena CA: Wiley), 1–41.

Von Neumann, J. (1966). The Theory of Self-Reproducing Automata. Champaign, IL: University of Illinois Press.

Mange, D., Stauffer, A., Peparolo, L., and Tempesti, G. (2004). “A macroscopic view of self-replication,” in Proceedings of the IEEE, Number 12. IEEE. 1929–1945.

Wood, G. (2002). Living Dolls: A Magical History of the Quest for Mechanical Life. London: Faber.

Wolfram, S. (1983). Statistical mechanics of cellular automata. Rev. Mod. Phys. 55, 601–644. doi:10.1103/RevModPhys.55.601

Wiener, N. (1948). Cybernetics: Or, Control and Communication in the Animal and the Machine. New York, NY: Wiley and Sons.

Wilson, R. A., and Keil, F. C. (eds) (1999). The MIT Encyclopedia of the Cognitive Sciences. Cambridge, MA: MIT Press.